Artificial intelligence regulation has shifted from discussion to enforcement. As enterprises move deeper into generative AI adoption, staying compliant is no longer a matter of good practice — it’s a matter of operational survival.

In 2026, compliance frameworks like the EU AI Act, the NIST AI Risk Management Framework (RMF), and ISO/IEC 42001 will define how organizations design, deploy, and monitor AI systems. The challenge for enterprise teams isn’t only to understand these regulations, but to translate them into architectural, governance, and procurement decisions that keep projects viable under regulatory scrutiny.

Below, we outline what’s changing, how the EU Artificial Intelligence Act will reshape enterprise AI operations, and what architectural steps ensure long-term compliance.

The EU AI Act: From Theory to Enforcement

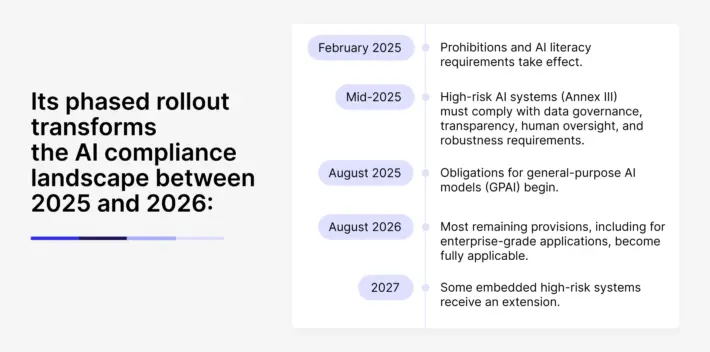

The EU AI Act officially came into force on August 1, 2024, marking the world’s first comprehensive legal framework for artificial intelligence.

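By 2026, most of the Act's obligations will apply. Its bans on prohibited practices have applied since February 2, 2025, obligations for general-purpose AI models since August 2, 2025, and most remaining provisions, including the bulk of the requirements for high-risk systems, apply from August 2, 2026. The Act reaches nearly every organization deploying or selling AI systems in the European market, with obligations scaled to each system's risk level. For high-risk systems, compliance will determine not only whether they can stay online, but whether enterprises can keep offering them within EU jurisdictions.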

Every layer of the AI architecture — from data pipelines to model evaluation — must prove accountability, explainability, and risk control.

Architectural Choices Under Regulatory Pressure

Architectural choices for AI implementation in 2026 are no longer driven solely by accuracy or efficiency. They are shaped by survivability under regulatory scrutiny.

In practice, meeting the EU AI Act's requirements for high-risk systems means enterprises must be able to demonstrate:

- Full data lineage tracking — knowing exactly what datasets contributed to each model’s output.

- Human-in-the-loop checkpoints — for workflows impacting safety, rights, or financial outcomes.

- Risk classification tags — labeling each model with its risk level, usage context, and compliance status.
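One way to make these three controls concrete is a model registry record that carries lineage and risk tags together, plus a gate that decides when a human must review an output. The sketch below is illustrative only: `ModelRecord`, `RiskLevel`, `lineage`, `requires_human_review`, the tier names, and the dataset IDs are hypothetical, and assigning a real system to a risk tier is a legal assessment, not a lookup.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    # Illustrative tiers echoing the Act's risk-based approach; not legal categories.
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    PROHIBITED = "prohibited"


@dataclass(frozen=True)
class ModelRecord:
    """Hypothetical registry entry tying a model version to its tags and lineage."""
    model_id: str
    version: str
    risk_level: RiskLevel
    usage_context: str
    compliance_status: str
    training_datasets: tuple[str, ...] = ()  # dataset IDs recorded for lineage tracking


def lineage(record: ModelRecord) -> list[str]:
    """List the datasets that contributed to this model version."""
    return [f"{record.model_id}@{record.version} <- {ds}" for ds in record.training_datasets]


def requires_human_review(record: ModelRecord, affects_rights_or_money: bool) -> bool:
    """Route an output to a human checkpoint when risk tier or impact is high."""
    if record.risk_level is RiskLevel.PROHIBITED:
        # Prohibited uses are blocked outright rather than reviewed.
        raise PermissionError(f"{record.model_id} must not be deployed")
    return record.risk_level is RiskLevel.HIGH or affects_rights_or_money


scorer = ModelRecord("credit-scorer", "2.3.1", RiskLevel.HIGH, "loan pre-screening",
                     "assessment in progress", ("applications-2024q4", "bureau-feed-v7"))
print(requires_human_review(scorer, affects_rights_or_money=False))  # True
print(lineage(scorer)[0])  # credit-scorer@2.3.1 <- applications-2024q4
```

Keeping the tags on the same record as the lineage means an auditor can answer "which data, which risk tier, which status" for a model version from one lookup.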

Failure to demonstrate these controls isn't just an administrative issue. It can lead to forced shutdowns of production AI systems or loss of access to the EU market. For this reason, forward-looking teams are designing AI stacks that make compliance observable, not assumed.

The NIST AI Risk Management Framework: U.S. Alignment

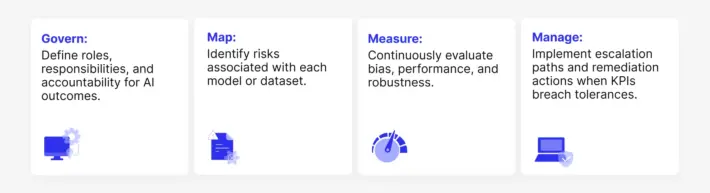

Across the Atlantic, the NIST AI RMF has evolved into the de facto standard for AI governance in U.S. federal agencies and regulated industries, even though adoption is voluntary. Its four core functions (Govern, Map, Measure, and Manage) now serve as procurement criteria for vendors and partners.

In practical terms, aligning with the NIST AI RMF means integrating its four functions into the enterprise AI lifecycle. Architectural implications include:

- Red-teaming environments for adversarial testing before deployment.

- Bias detection pipelines feeding into centralized evaluation harnesses.

- Automated escalation playbooks that activate when safety or performance thresholds are exceeded.
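A release gate in an evaluation harness can tie these pieces together: bias and safety metrics come in, thresholds are checked, and breaches trigger an escalation hook. This is a minimal sketch assuming metrics arrive as a plain dictionary; the metric names, limits, `release_gate`, and the `escalate` callback are hypothetical choices, not values or APIs prescribed by the NIST AI RMF.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    higher_is_worse: bool = True  # False for metrics such as accuracy


def breaches(metrics: dict[str, float], thresholds: list[Threshold]) -> list[str]:
    """Compare harness results against thresholds; missing metrics count as breaches."""
    found = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            found.append(f"{t.metric}: not measured")
            continue
        worse = value > t.limit if t.higher_is_worse else value < t.limit
        if worse:
            found.append(f"{t.metric}: {value} vs limit {t.limit}")
    return found


def release_gate(metrics: dict[str, float], thresholds: list[Threshold],
                 escalate: Callable[[list[str]], None]) -> bool:
    """Return True if the model may ship; otherwise hand breaches to the playbook hook."""
    found = breaches(metrics, thresholds)
    if found:
        escalate(found)  # e.g. page the model owner, open an incident, freeze rollout
        return False
    return True


THRESHOLDS = [
    Threshold("toxicity_rate", 0.01),
    Threshold("demographic_parity_gap", 0.05),  # fed by the bias-detection pipeline
    Threshold("accuracy", 0.90, higher_is_worse=False),
]

results = {"toxicity_rate": 0.002, "demographic_parity_gap": 0.03, "accuracy": 0.87}
print(release_gate(results, THRESHOLDS, escalate=print))
# prints ['accuracy: 0.87 vs limit 0.9'] and then False
```

Treating an unmeasured metric as a breach is a deliberate choice: it keeps a broken bias pipeline from silently passing the gate.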

Together, the EU AI Act and NIST AI RMF create a dual framework that enterprises must reconcile: one emphasizing regulatory evidence, the other operational maturity.

Mapping Security, Compliance, and Regulatory Controls in AI

Security and compliance requirements now drive architecture from the very first design session.

Core controls include:

- Identity and access management — ensuring each AI action is linked to an authenticated, authorized user.

- Data handling and deletion — proving the ability to mask sensitive data and execute verified deletion.

- Logging and traceability — maintaining signed logs that tie every model output to its source material, model version, and governing policy.
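The signed-log requirement can be sketched as a hash chain in which each entry records the output, its sources, the model version, the governing policy, and the user, and also embeds the hash of the previous entry. This example uses Python's standard `hmac`, `hashlib`, and `json` modules; the field names, `append_entry`, `verify_log`, and the key handling are assumptions. HMAC is a shared-key stand-in for a true digital signature; a production system would more likely use asymmetric signatures with keys held in a KMS or HSM and write to append-only storage.

```python
import hashlib
import hmac
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def append_entry(log: list[dict], key: bytes, *, output_id: str, sources: list[str],
                 model_version: str, policy_id: str, user_id: str) -> dict:
    """Append an entry chained to the previous one and authenticated with HMAC-SHA256."""
    body = {
        "output_id": output_id,
        "sources": sources,
        "model_version": model_version,
        "policy_id": policy_id,
        "user_id": user_id,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    payload = json.dumps(body, sort_keys=True).encode()  # canonical serialization
    entry = dict(body,
                 hash=hashlib.sha256(payload).hexdigest(),
                 signature=hmac.new(key, payload, hashlib.sha256).hexdigest())
    log.append(entry)
    return entry


def verify_log(log: list[dict], key: bytes) -> bool:
    """Recompute every hash and HMAC and check that each entry links to its predecessor."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k not in ("hash", "signature")}
        if body["prev_hash"] != prev:
            return False
        payload = json.dumps(body, sort_keys=True).encode()
        if hashlib.sha256(payload).hexdigest() != entry["hash"]:
            return False
        expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, entry["signature"]):
            return False
        prev = entry["hash"]
    return True


KEY = b"demo-key"  # hypothetical; load real keys from a secrets manager
audit_log: list[dict] = []
append_entry(audit_log, KEY, output_id="out-001", sources=["kb/policy-17.pdf"],
             model_version="llm-2025-06", policy_id="pii-redaction-v3", user_id="u-42")
append_entry(audit_log, KEY, output_id="out-002", sources=["crm/ticket-881"],
             model_version="llm-2025-06", policy_id="pii-redaction-v3", user_id="u-42")
print(verify_log(audit_log, KEY))  # True
audit_log[0]["model_version"] = "llm-2024-01"  # simulate tampering
print(verify_log(audit_log, KEY))  # False
```

Because every entry embeds the previous hash, editing, removing, or reordering an earlier record breaks verification for the rest of the chain; detecting truncation of the newest records additionally requires anchoring the latest hash somewhere outside the log.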

These measures collectively address three dominant enterprise AI risks:

- Data leakage – minimized through private connectivity and data masking.

- Unauthorized actions – prevented via real-time role checks and workflow approvals.

- Weak accountability – resolved through immutable audit trails and signed logs.
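Real-time role checks and workflow approvals can be reduced to a small authorization function evaluated on every AI-initiated action. This is a sketch under stated assumptions: the role names, permission sets, `ActionRequest`, and `authorize` are hypothetical, and a real deployment would read roles from its identity provider rather than a hard-coded map.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical role-to-permission map; in production this would come from the
# identity provider (e.g. group claims in the user's token), not a constant.
ROLE_PERMISSIONS = {
    "analyst": {"summarize", "search"},
    "loan_officer": {"summarize", "search", "draft_decision"},
}

# Actions with financial or rights impact that also need a second person's sign-off.
REQUIRES_APPROVAL = {"draft_decision"}


@dataclass(frozen=True)
class ActionRequest:
    user_id: str          # authenticated identity, never taken from the prompt
    roles: frozenset
    action: str
    approved_by: Optional[str] = None


def authorize(req: ActionRequest) -> str:
    """Evaluate at call time; return 'allowed', 'pending_approval', or 'denied'."""
    permitted = set()
    for role in req.roles:
        permitted |= ROLE_PERMISSIONS.get(role, set())
    if req.action not in permitted:
        return "denied"
    if req.action in REQUIRES_APPROVAL:
        if req.approved_by is None:
            return "pending_approval"
        if req.approved_by == req.user_id:
            return "denied"  # self-approval defeats the four-eyes check
    return "allowed"


print(authorize(ActionRequest("u-7", frozenset({"analyst"}), "draft_decision")))       # denied
print(authorize(ActionRequest("u-9", frozenset({"loan_officer"}), "draft_decision")))  # pending_approval
print(authorize(ActionRequest("u-9", frozenset({"loan_officer"}), "draft_decision",
                              approved_by="u-3")))                                     # allowed
```

Returning `pending_approval` instead of failing lets the workflow park the action until a second person signs off. A fuller version would also verify that the approver holds a role entitled to approve.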

Private network connectivity is a cornerstone of this strategy. Major cloud providers now support private endpoints for regulated workloads:

- AWS Bedrock via PrivateLink

- Azure AI and Azure OpenAI via Private Endpoints

- Google Vertex AI via Private Service Connect

These features allow enterprises to use vendor-hosted AI services without exposing traffic to the public internet, a condition many regulated enterprises treat as non-negotiable for compliance under the EU AI Act and ISO/IEC 42001.
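Part of the evidence that traffic stays private is showing that the service hostname resolves only to private addresses from inside the network (assuming PrivateLink, Private Endpoints, or Private Service Connect is set up with private DNS). The sketch below uses Python's standard `socket` and `ipaddress` modules; `all_private` and `resolves_privately` are hypothetical helper names, the hostname you pass would be your own endpoint, and the sample addresses are made up. A DNS check is only one piece of evidence: it does not prove there is no other egress path, so it complements flow logs or packet traces rather than replacing them.

```python
import ipaddress
import socket


def all_private(addresses) -> bool:
    """True only if there is at least one address and none is publicly routable."""
    ips = [ipaddress.ip_address(a) for a in addresses]
    return bool(ips) and all(ip.is_private for ip in ips)


def resolves_privately(hostname: str, port: int = 443) -> bool:
    """Resolve the service hostname from inside the network and check every answer."""
    answers = socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
    return all_private({info[4][0] for info in answers})


# Pure check on recorded resolver answers (addresses are made-up examples).
print(all_private(["10.20.1.15", "10.20.2.31"]))  # True
print(all_private(["10.20.1.15", "52.94.1.1"]))   # False
```

Splitting the pure `all_private` check from the live lookup makes it easy to re-run the check against resolver answers captured as audit evidence.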

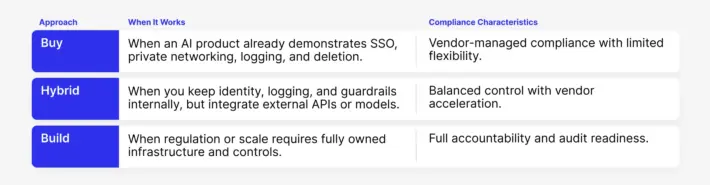

Buying AI, Building AI, or Choosing a Hybrid AI Solution: Compliance-Driven Architecture Choices

The 2026 enterprise AI landscape revolves around three operating models — Buy, Hybrid, and Build — each corresponding to distinct compliance strategies.

Enterprises are also expected to maintain three key compliance deliverables:

- Control catalog — listing each safeguard and how it is enforced at runtime.

- Compliance matrix — mapping each control to the relevant EU AI Act, NIST AI RMF, and ISO/IEC 42001 provisions.

- Risk register — identifying owners, mitigations, and evidence for risks such as data leakage or unauthorized actions.

These artifacts turn “governance” from an abstract concept into something tangible — a live system that regulators and auditors can inspect.
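A lightweight way to keep the compliance matrix and risk register "live" is to store them as data and check them for gaps automatically, for example in CI. Everything below is illustrative: the control names, risk entries, `matrix_gaps`, `register_issues`, and the mapping of controls to EU AI Act articles, NIST AI RMF functions, and ISO/IEC 42001 clauses are assumptions meant as starting points for compliance review, not an authoritative legal mapping.

```python
FRAMEWORKS = ("eu_ai_act", "nist_ai_rmf", "iso_iec_42001")

# Control catalog with an illustrative compliance-matrix mapping.
CONTROLS = {
    "signed-audit-log": {
        "eu_ai_act": ["Art. 12 record-keeping"],
        "nist_ai_rmf": ["MEASURE", "MANAGE"],
        "iso_iec_42001": ["Clause 9 performance evaluation"],
    },
    "human-approval-gate": {
        "eu_ai_act": ["Art. 14 human oversight"],
        "nist_ai_rmf": ["MANAGE"],
        "iso_iec_42001": [],  # not yet mapped
    },
    "private-endpoints": {
        "eu_ai_act": ["Art. 15 accuracy, robustness and cybersecurity"],
        "nist_ai_rmf": ["MANAGE"],
        "iso_iec_42001": ["Clause 8 operation"],
    },
}

# Risk register entries: owner, mitigating controls, and collected evidence.
RISKS = [
    {"risk": "data leakage", "owner": "security-lead",
     "mitigations": ["private-endpoints", "pii-masking"], "evidence": ["flow-log-export"]},
    {"risk": "unauthorized actions", "owner": None,
     "mitigations": ["human-approval-gate"], "evidence": []},
]


def matrix_gaps(controls: dict) -> list[tuple[str, str]]:
    """Controls that are not yet mapped to one of the target frameworks."""
    return [(name, fw) for name, mapping in sorted(controls.items())
            for fw in FRAMEWORKS if not mapping.get(fw)]


def register_issues(risks: list[dict], controls: dict) -> list[str]:
    """Risks missing an owner, evidence, or a mitigation present in the catalog."""
    issues = []
    for r in risks:
        if not r.get("owner"):
            issues.append(f"{r['risk']}: no owner")
        for m in r.get("mitigations", []):
            if m not in controls:
                issues.append(f"{r['risk']}: mitigation '{m}' missing from control catalog")
        if not r.get("evidence"):
            issues.append(f"{r['risk']}: no evidence")
    return issues


print(matrix_gaps(CONTROLS))  # [('human-approval-gate', 'iso_iec_42001')]
for issue in register_issues(RISKS, CONTROLS):
    print(issue)
```

Failing a build when either list is non-empty is one way to keep the artifacts from drifting away from what is actually deployed.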

AI Compliance in Practice

Before launching a proof of concept (PoC), enterprises must prove that controls function at runtime. In the 2026 compliance environment, screenshots and declarations are no longer sufficient; only operational evidence counts.

Checklist for AI compliance validation:

- Network isolation: Demonstrate private connectivity to model endpoints (e.g., packet trace without public egress).

- Data handling: Show logs linking outputs to source data, model versions, and user prompts; conduct a verified deletion test.

- Safety: Demonstrate both blocked and approved cases of output moderation or escalation.

These runtime proofs align with the EU AI Act's record-keeping and traceability requirements, and with the NIST AI RMF's Measure and Govern functions.

PoCs lacking this evidence won’t scale to production in regulated markets like the EU or in industries such as finance, healthcare, or public administration.
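The verified deletion test from the checklist can be automated: delete a record, then confirm it is gone from the primary store and from any derived index, and that a deletion receipt was logged. The `DocumentStore` class and `verified_deletion_test` function below are a hypothetical in-memory stand-in; in a real system the same test would have to cover vector indexes, caches, logs, and backups, and data already absorbed into fine-tuned model weights cannot be removed this way.

```python
import hashlib


class DocumentStore:
    """Toy stand-in for a primary store plus a derived keyword index."""

    def __init__(self):
        self.docs = {}               # doc_id -> text
        self.index = {}              # token -> set of doc_ids (derived copy of the data)
        self.deletion_receipts = []  # evidence that a deletion happened

    def put(self, doc_id: str, text: str) -> None:
        self.docs[doc_id] = text
        for token in text.lower().split():
            self.index.setdefault(token, set()).add(doc_id)

    def search(self, token: str) -> list[str]:
        return sorted(self.index.get(token.lower(), set()))

    def delete(self, doc_id: str) -> None:
        text = self.docs.pop(doc_id)
        for token in set(text.lower().split()):
            ids = self.index.get(token)
            if ids is not None:
                ids.discard(doc_id)
                if not ids:
                    del self.index[token]  # drop empty postings entirely
        # The receipt stores a content hash rather than the deleted content.
        digest = hashlib.sha256(text.encode()).hexdigest()
        self.deletion_receipts.append({"doc_id": doc_id, "sha256": digest})


def verified_deletion_test(store: DocumentStore, doc_id: str, probe_token: str) -> bool:
    """Delete, then check the primary store, the derived index, and the receipt log."""
    store.delete(doc_id)
    gone_from_primary = doc_id not in store.docs
    gone_from_index = doc_id not in store.search(probe_token)
    receipt_logged = any(r["doc_id"] == doc_id for r in store.deletion_receipts)
    return gone_from_primary and gone_from_index and receipt_logged


store = DocumentStore()
store.put("d1", "Customer IBAN DE89 on file")
store.put("d2", "Customer churn notes")
print(verified_deletion_test(store, "d1", probe_token="iban"))  # True
print(store.search("customer"))  # ['d2']
```

Checking the derived index separately matters because deleting only the primary record is the most common way a "deleted" item stays retrievable.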

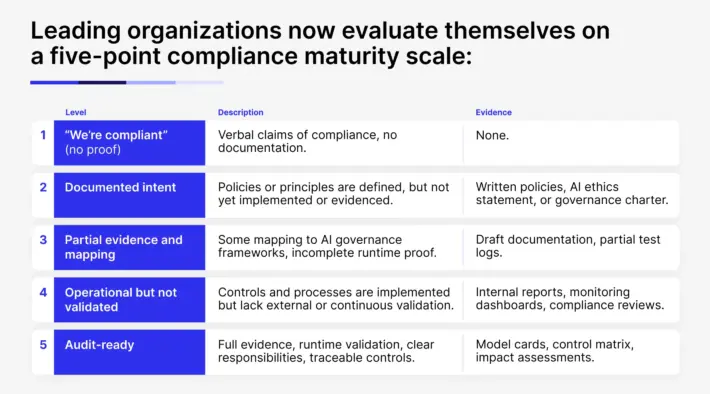

AI Governance as a Continuous Function

In 2026, compliance is not a checkbox; it’s a continuous governance function embedded in product operations.

Leading organizations now evaluate themselves on a five-level compliance maturity scale. Achieving level 5 means being ready for audits under the EU AI Act, NIST AI RMF, and ISO/IEC 42001, while avoiding the operational risks of non-compliance, from blocked launches to suspended systems.

Why AI Compliance Now Defines Competitive Advantage

While the EU AI Act focuses on compliance, its secondary effect is differentiation. Vendors and enterprises that can prove compliance will be best positioned in regulated industries, winning contracts where others face delays or exclusions.

Buyers are already adapting their roadmaps to the EU AI Act implementation timeline. They are:

- Aligning capability rollouts with enforcement phases.

- Investing in audit-ready model cards and incident response systems.

- Embedding Data Protection Impact Assessments (DPIAs) and AI Impact Assessments into PoC workflows.

This proactive stance doesn't just reduce exposure to fines; it accelerates go-to-market timelines. Enterprises that can evidence compliance from day one move faster through procurement, legal, and risk review cycles.

In short: regulation is no longer the barrier to innovation. It’s the blueprint for sustainable deployment.

Beyond the EU AI Act: Global Convergence in AI Governance

While the EU AI Act sets the pace, similar regulations are emerging globally.

The AI in Government Act in the U.S., Canada's proposed Artificial Intelligence and Data Act (AIDA), and Japan's AI Governance Guidelines all share foundational principles: transparency, risk-based classification, and accountability.

In Europe, the EU AI Act is already influencing vendor roadmaps and partner contracts. In the U.S., the NIST AI RMF and executive orders on AI shape federal adoption. Together, they form a converging global governance model that will define how enterprises design and monitor AI systems through 2030.

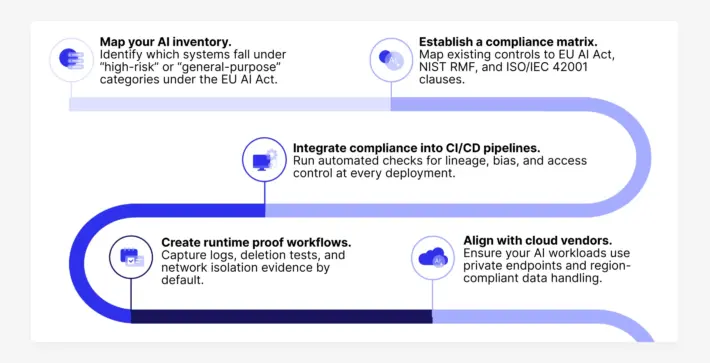

Preparing for AI Governance & Regulations in 2026: Practical Steps for Enterprises

As 2026 approaches, enterprises should treat compliance as a technical and strategic priority.

Enterprises that begin this work now will reach 2026 with confidence — able to prove compliance not only on paper, but in production.

Conclusion: AI Regulations as the New Architecture Layer

AI regulations have matured from theoretical guidance to operational necessity. In 2026, the EU AI Act, NIST AI RMF, and ISO/IEC 42001 will jointly define what “responsible AI” means in practice.

Enterprises that embed these frameworks directly into architecture and governance will not only stay compliant — they’ll stay competitive.

Regulation, once seen as a barrier, is becoming the scaffolding of resilient AI systems.

Future-Proof Your Enterprise with AI Governance and Regulations

Our new e-book, Off-the-Shelf vs. Custom AI: What Works in 2026, explores how regulatory and operational pressures are shaping enterprise AI architectures — from compliance-by-design strategies to practical governance frameworks.

Download the e-book to learn how to build AI systems that thrive under the new rules.