AI Adoption in 2026: Cost Models Explained

By 2026, enterprise AI adoption will have moved beyond experimentation. Organizations face decisions that combine speed, cost, regulatory compliance, and long-term strategic advantage. The classic “buy vs build” question is no longer a binary choice. Instead, it involves analyzing multiple structural factors:

- Proprietary data advantage

- Latency and performance requirements

- Intellectual property and differentiation needs

- Regulatory compliance and audit obligations

- Cost dynamics over multiple years

Understanding these dimensions allows enterprises to select the most sustainable and cost-effective AI deployment strategy.

Proprietary Data as a Strategic Asset

Proprietary data remains the single most decisive factor in AI adoption. Enterprises with exclusive, high-quality, large-scale datasets can extract far greater value from custom AI development. In these cases, AI functions as a compounding asset, where every model iteration:

- Improves predictive performance

- Strengthens competitive moats

- Enhances business insights

In sectors such as finance, insurance, and industrial automation, building proprietary AI models is often essential to maintaining differentiation.

Conversely, organizations with limited or commoditized datasets benefit more from buying pre-trained AI models. Vendor solutions, particularly in regulated domains like healthcare, may deliver measurable outcomes faster than internal development, often with built-in compliance certifications.

Latency and Operational Requirements

Latency requirements also influence whether an enterprise should build or buy. Use cases such as algorithmic trading, real-time fraud detection, or safety-critical industrial automation require millisecond-level responsiveness. While vendor infrastructure has improved, shared services still add network round trips and multi-tenant queuing delay that the customer cannot control. In these scenarios, owning the serving stack or deploying specialized edge models gives the enterprise direct control over response times and makes service-level targets easier to meet.

Intellectual Property and Competitive Advantage

AI can be a differentiating asset when it underpins unique capabilities, such as proprietary risk models, recommendation engines, or specialized generative systems. Dependence on external vendors introduces risks:

- Reduced control over strategic algorithms

- Exposure to vendor roadmaps

- Potential loss of IP exclusivity

In such cases, internal development or co-development with joint-IP agreements secures long-term control, even if it requires higher initial investment.

For custom builds, break-even typically occurs in year three or later, depending on sustained usage and architecture stability. Subscription-based models often remain more economical for exploratory or low-volume workloads.
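The break-even reasoning can be made concrete with a small calculation. The sketch below is illustrative only: it assumes flat subscription pricing and a constant yearly run cost for the custom build, and every figure in it is hypothetical rather than a benchmark. The function and parameter names are invented for this example.

```python
from typing import Optional


def cumulative_buy_cost(annual_subscription: float, years: int) -> float:
    """Total subscription spend after `years` years (flat pricing assumed)."""
    return annual_subscription * years


def cumulative_build_cost(upfront: float, annual_run: float, years: int) -> float:
    """One-time build investment plus a constant yearly operating cost."""
    return upfront + annual_run * years


def break_even_year(
    upfront: float,
    annual_run: float,
    annual_subscription: float,
    horizon: int = 10,
) -> Optional[int]:
    """Return the first year in which building costs no more than buying.

    Returns None if break-even is not reached within `horizon` years.
    """
    for year in range(1, horizon + 1):
        build = cumulative_build_cost(upfront, annual_run, year)
        buy = cumulative_buy_cost(annual_subscription, year)
        if build <= buy:
            return year
    return None


# Hypothetical high-volume case: the build pays off in year three.
print(break_even_year(upfront=1_500_000, annual_run=400_000,
                      annual_subscription=900_000))  # 3

# Hypothetical low-volume case: the subscription stays cheaper throughout.
print(break_even_year(upfront=1_500_000, annual_run=400_000,
                      annual_subscription=300_000))  # None
```

In the second case the subscription costs less per year than running the custom build, so the upfront investment is never recovered. This matches the point that low-volume workloads tend to favor buying.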

Regulatory Compliance and Governance Considerations

In 2026, AI adoption is subject to a growing body of binding regulation and voluntary standards. Relevant frameworks include:

- EU AI Act

- NIST AI Risk Management Framework

- ISO/IEC 42001

- UK Responsible AI Guidance

- Sector-specific rules in finance, healthcare, and government

Vendor certifications can simplify adoption, but in jurisdictions with strict sovereignty, audit, or explainability requirements, internal or hybrid control is often necessary. Regulatory obligations can outweigh the speed advantage of buying, particularly for mission-critical applications.

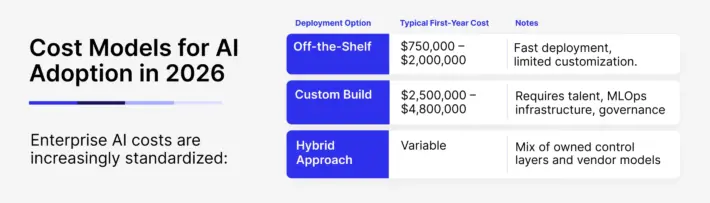

Deployment Strategies: Buy, Build, or Hybrid

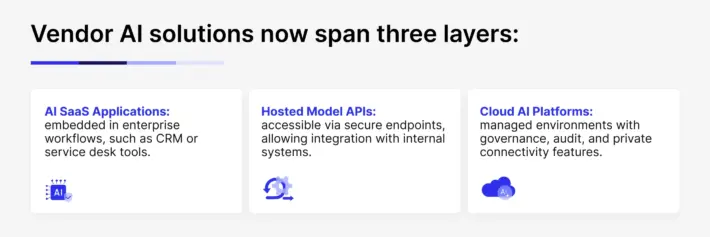

Buying AI: Speed with Governance

Buying is effective when processes are standardized, speed-to-value is critical, internal teams are still developing AI capabilities, and compliance can be contractually managed.

Consider buying if:

- Existing workflows align with vendor solutions

- Impact is required within weeks

- Internal AI engineering capacity is limited

- Usage is moderate, and cost scales favorably

Building AI: Full Control and Differentiation

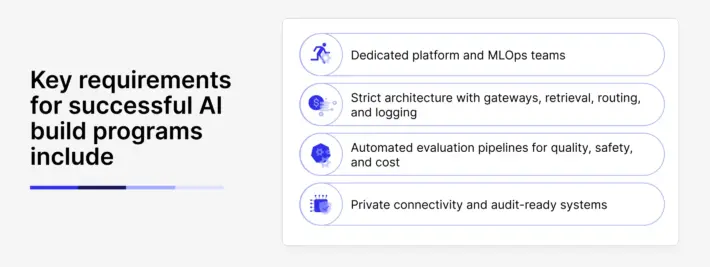

Building AI internally gives organizations control over the full lifecycle, from design to evaluation and compliance.

Build is optimal when:

- Proprietary data offers strategic advantage

- Latency and throughput requirements are stringent

- AI output represents IP or core differentiation

- Regulatory compliance demands full in-house control

- Scaling economics favor internal infrastructure

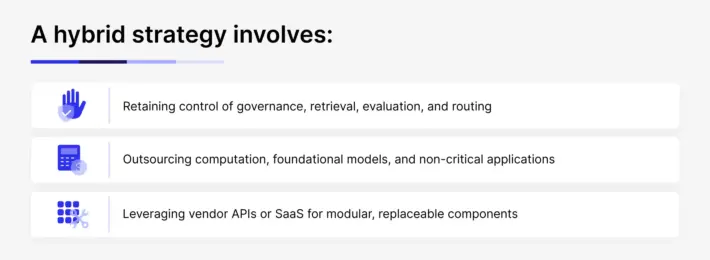

Hybrid Adoption: Owning the Core, Renting the Rest

Hybrid adoption has become the default for enterprises balancing speed and control.

Hybrid works when:

- Sensitive or high-value data must remain in-house

- Flexibility over time is required, e.g., scale, cost, or compliance evolution

- Workloads vary in control needs, from standard SaaS tasks to mission-critical operations

- Avoiding vendor lock-in is strategically important

Typical hybrid patterns include Data-Owned RAG, Fine-Tuned Edge Models, and Orchestrated Agents, with the core control plane maintained internally.
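One way to picture "owning the core, renting the rest" is a routing rule inside the internally maintained control plane. The sketch below is a minimal illustration, not a reference architecture: the `Workload` fields, the `Target` values, and the example workload names are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    IN_HOUSE = "in_house"  # internally operated models and data
    VENDOR = "vendor"      # rented vendor or SaaS capability


@dataclass(frozen=True)
class Workload:
    name: str
    handles_sensitive_data: bool
    mission_critical: bool


def route(workload: Workload) -> Target:
    """Keep sensitive or mission-critical workloads in-house; rent the rest."""
    if workload.handles_sensitive_data or workload.mission_critical:
        return Target.IN_HOUSE
    return Target.VENDOR


# Hypothetical workloads, for illustration only.
workloads = [
    Workload("claims-triage", handles_sensitive_data=True, mission_critical=False),
    Workload("meeting-summaries", handles_sensitive_data=False, mission_critical=False),
]
for w in workloads:
    print(w.name, "->", route(w).value)
```

Because the routing decision lives in code the enterprise owns, a workload can later be moved between targets by changing its classification rather than renegotiating the architecture.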

AI Adoption Guidelines in 2026

Rule of thumb: prioritize building or hybrid control if three or more of the following conditions apply:

- Proprietary data drives competitive advantage

- Regulations require strict auditability or local residency

- Latency or throughput requirements exceed vendor limits

- AI algorithms are part of IP or core differentiation

- Vendor costs scale unfavorably with projected usage

- Full control over AI lifecycle is strategically required

Otherwise, buying AI with structured governance offers speed and predictability.
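The rule of thumb above can be written as a simple checklist count. This is a sketch under the assumption that each condition is answered with a plain yes or no. The field names are shorthand invented for this example, and the default threshold of three comes from the guideline itself.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class AdoptionSignals:
    proprietary_data_advantage: bool = False
    strict_audit_or_residency: bool = False
    latency_exceeds_vendor_limits: bool = False
    algorithms_are_core_ip: bool = False
    vendor_costs_scale_unfavorably: bool = False
    full_lifecycle_control_required: bool = False


def recommend(signals: AdoptionSignals, threshold: int = 3) -> str:
    """Count the conditions that apply and compare against the threshold."""
    met = sum(getattr(signals, f.name) for f in fields(signals))
    return "build_or_hybrid" if met >= threshold else "buy_with_governance"


# Hypothetical assessment: three conditions apply, so the rule favors control.
assessment = AdoptionSignals(
    proprietary_data_advantage=True,
    strict_audit_or_residency=True,
    algorithms_are_core_ip=True,
)
print(recommend(assessment))  # build_or_hybrid
```

A real assessment would likely weight the conditions rather than count them equally. The equal-weight count is used here only because it mirrors the guideline as stated.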

Conclusion: AI as Strategic Enterprise Infrastructure

By 2026, AI adoption success is measured not by model count or size, but by governance, control, auditability, and adaptability. Adopting AI is no longer a simple procurement or engineering choice. It is a strategic operating model that requires alignment of technical, economic, and regulatory factors.

Next Step: Access the Full AI 2026 Guide

For detailed cost benchmarks, reference architectures, and regulatory-aligned AI adoption strategies, download Sombra’s 2026 Enterprise AI e-book.